One Shot Learning

I’ve become interested in how we might be able to bridge the gap between deep learning and the learning that humans are capable of.

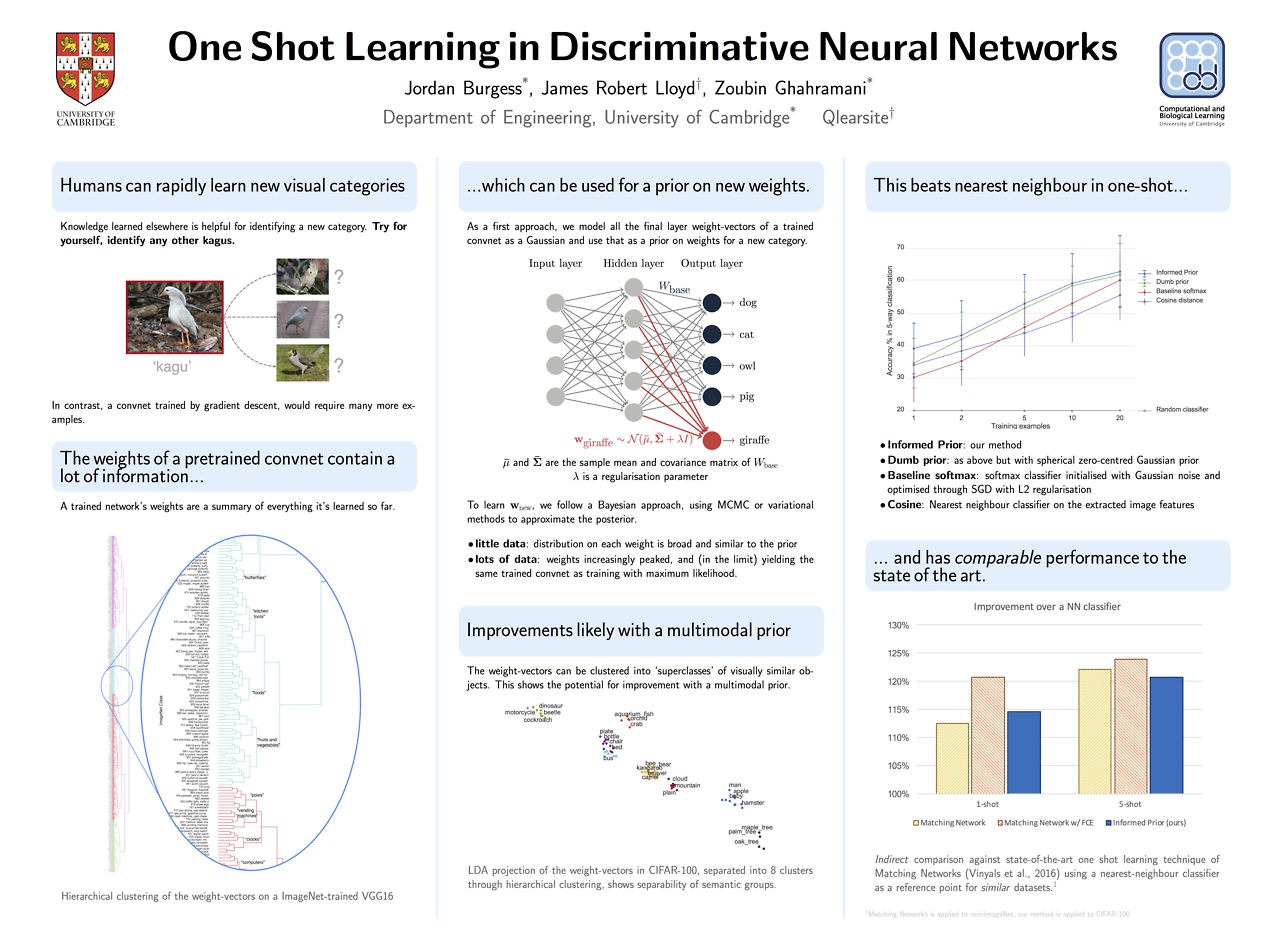

Deep learning continues to amaze me, but to train a classifier you typically need hundreds of examples — even if you use a pretrained network. I’ve been exploring how we could use the same architectures, but get the models to perform well with almost no training data.

I’ve got a paper and poster at the NIPS workshop on Bayesian Deep Learning, on an idea I explored with Zoubin Ghahramani. It’s remarkably simple and seems to get state-of-the-art performance. You take, say, a VGG16 trained on all 1000 ImageNet classes and freeze all but the last layer. You model the weight vectors feeding each of the 1000 softmax outputs as samples from your favourite distribution (Gaussian), and use that as a prior on the weight vector that needs to be trained for any new class. With each training example, you perform a Bayesian update on this weight distribution. This is data efficient when you have only a few examples, because the prior does most of the work, and if you have a lot of data the weight distribution will converge on the point estimate you’d get with stochastic gradient descent.
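To make the prior-then-update idea concrete, here is a toy sketch in NumPy. It is not the method from the paper. The big assumption is that I swap the softmax likelihood for a Gaussian (linear regression) likelihood, purely so that each Bayesian update has a closed form. The random matrix `W_old` stands in for the 1000 last-layer weight vectors of a real network, and `fit_gaussian_prior`, `bayes_update`, `noise_var` and the diagonal covariance are all hypothetical choices made for illustration.

```python
import numpy as np

def fit_gaussian_prior(W, jitter=1e-3):
    """Fit a Gaussian to the rows of W (one row per existing class).

    Assumption: diagonal covariance, to keep the sketch small.
    """
    mu = W.mean(axis=0)
    var = W.var(axis=0) + jitter
    return mu, np.diag(var)

def bayes_update(mu, Sigma, x, y, noise_var=0.1):
    """One exact conjugate update for w ~ N(mu, Sigma), y ~ N(w @ x, noise_var).

    Assumption: Gaussian likelihood instead of softmax, so the posterior
    stays Gaussian and can be written down directly.
    """
    Sx = Sigma @ x
    gain = Sx / (x @ Sx + noise_var)
    mu_new = mu + gain * (y - x @ mu)
    Sigma_new = Sigma - np.outer(gain, Sx)
    return mu_new, Sigma_new

rng = np.random.default_rng(0)
d = 8  # toy feature size; a real penultimate layer is much wider

# Stand-in for the weight vectors of the 1000 already-trained classes.
W_old = rng.normal(loc=0.5, scale=0.2, size=(1000, d))
mu0, Sigma0 = fit_gaussian_prior(W_old)

# Hypothetical "true" weights for a new class, drawn from the same family.
w_true = rng.normal(loc=0.5, scale=0.2, size=d)

mu, Sigma = mu0.copy(), Sigma0.copy()
errors = [np.linalg.norm(mu - w_true)]  # error with zero examples (prior only)
for _ in range(200):
    x = rng.normal(size=d)  # stand-in for frozen-network features
    y = w_true @ x + rng.normal(scale=np.sqrt(0.1))
    mu, Sigma = bayes_update(mu, Sigma, x, y, noise_var=0.1)
    errors.append(np.linalg.norm(mu - w_true))

for n in (0, 1, 5, 200):
    print(f"examples={n:3d}  distance to true weights={errors[n]:.3f}")
```

With no examples the estimate is just the prior mean, and each new example shrinks the posterior covariance. With the real softmax likelihood the posterior is no longer Gaussian, so the update would need some approximation or sampling scheme instead of the closed form used here.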

See my poster below, and you can read the extended abstract on arXiv.