Accelerating Progress

How different will the world be in 30 years? If we continue on our trajectory from the past 30, we may be only decades away from creating artificial intelligence vastly more powerful than humankind.

Creating AI would be the most important event in human history. Everything that civilisation has created, good and bad, has been the result of our intelligence. As technology acquires a mind of its own and continues to expand, many predict we’ll enter a world where biological intelligence is no longer the dominant force in our universe. It sounds like science fiction, but should we take it seriously?

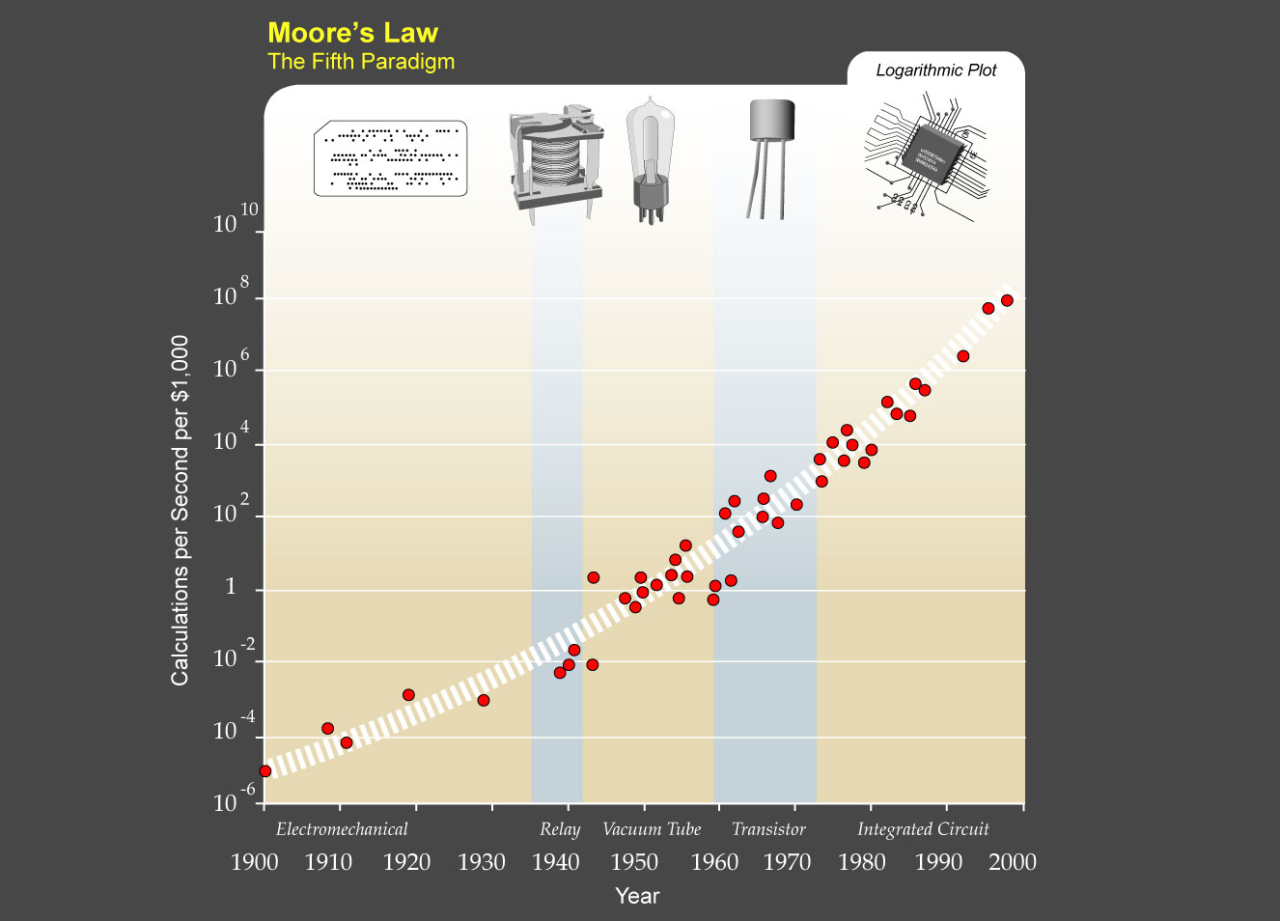

This prediction is based on extrapolating the exponential growth we have seen in information technologies. Moore’s law, which states that the number of transistors on an integrated circuit doubles approximately every two years, is one example. Over different time scales, the same is true of processing power, network bandwidth, storage capacity, brain scanning resolution and more. Importantly, the trend seems to extend beyond any one particular technology: the curve for calculations per second extends back to vacuum tubes and electro-mechanical devices.

The trend of exponential growth extends Moore’s law to earlier technologies and is expected to continue into the future – Courtesy of Kurzweil Technologies, Inc. Creative Commons 1.0

Why should this be? Moore’s law is far from a physical law; it is merely an observation and prediction, but it has been extraordinarily prescient for almost 50 years. The basic explanation for it is that we can use the latest technology to extend the boundaries of what’s possible and create the next. Each generation can be made X% better, and this compounds to produce exponential change over time.
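To make the compounding argument concrete, here is a minimal sketch in Python. The function names and the example figures (a 41% gain per yearly generation, a 50-year horizon) are illustrative assumptions of mine, not measured data; the point is only that a fixed percentage gain per generation implies a fixed doubling time.

```python
import math


def growth_after(years, improvement_per_generation, years_per_generation):
    """Total improvement factor after `years` of compounding a fixed gain."""
    generations = years / years_per_generation
    return (1 + improvement_per_generation) ** generations


def doubling_time(improvement_per_generation, years_per_generation):
    """Years needed for capability to double at a fixed per-generation gain."""
    return years_per_generation * math.log(2) / math.log(1 + improvement_per_generation)


# Hypothetical: a 41% gain every year works out to roughly a doubling every two years.
print(round(doubling_time(0.41, 1.0), 2))

# Doubling (a 100% gain) every two years for 50 years is 25 doublings.
print(round(growth_after(50, 1.0, 2.0)))  # 2**25 = 33554432
```

A steady "X% better" that sounds modest in any single generation turns into a factor of tens of millions over the 50 years Moore’s law has held.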

Is this exponential growth going to continue?

The predicted end date for Moore’s law has been continually pushed back. At every stage there have been multiple technical problems threatening its continuation. However, each time, (technology-assisted) human ingenuity has figured out a way around them in time.

Still, there will be an end to Moore’s law as we know it. Currently, that’s expected to be around 2023, when quantum tunnelling, photolithography limits or various other issues look set to become insurmountable.

However, when one technology approaches its limit, the pressure (and funding) on alternatives increases. There are already several plausible candidates for this: 3D integrated circuits, alternative materials such as nanotubes, DNA computing and quantum computers. The theoretical limits of computation with these technologies are many orders of magnitude greater than what’s necessary for the profound change proposed.

As far as I can tell, there will always be vast economic rewards for progress in information technologies. There’s always a compelling advantage in being faster, smarter, cheaper or smaller than the competition whatever the circumstance. Progress has been unaffected by world war or global recession so I don’t expect we will reach an upper limit on computation any time soon.

Knowing the trend is one thing; comprehending its implications still makes you a visionary.

The computational power of the human brain is estimated at around 10^16 calculations per second. By the second half of the 2020s, this should be available for less than $1000. As brain scanning technologies mature in the next decade, we’re increasingly likely to find an effective software model to run on top of this hardware, capable of simulating a human brain[1].
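As a rough sanity check on that date, the extrapolation can be sketched in a few lines of Python. It starts from the data point quoted in the footnote on Turing’s estimate (10^10 calculations per second for about $100,000 in 2000). The doubling times for price-performance are assumptions of mine, and `year_reached` is a hypothetical helper, not anything taken from Kurzweil’s own model.

```python
import math


def year_reached(start_year, start_cps_per_dollar, target_cps, budget_dollars, doubling_years):
    """Year when `budget_dollars` buys `target_cps`, assuming steady doubling."""
    target_cps_per_dollar = target_cps / budget_dollars
    doublings = math.log2(target_cps_per_dollar / start_cps_per_dollar)
    return start_year + doublings * doubling_years


# Data point from the footnotes: 10^10 cps for about $100,000 in 2000.
start_cps_per_dollar = 1e10 / 1e5

# Assumed doubling times for price-performance, in years.
for doubling_years in (1.0, 1.5, 2.0):
    year = year_reached(2000, start_cps_per_dollar, 1e16, 1000, doubling_years)
    print(f"doubling every {doubling_years} years -> around {year:.0f}")
```

Closing the gap takes about 26.6 doublings. If price-performance doubles every year, that lands in the second half of the 2020s; at a doubling every two years it slips to the 2050s. The prediction is only as good as the assumed rate.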

Ray Kurzweil expects a computer to consistently pass the Turing test by 2029. It’s worth appreciating just how soon that is. In order to pass, the machine must have near-complete knowledge of natural language, culture, emotions and human behaviour. Within 15 years, the machine would appear to us as a conscious being.

Unlike our human brains, there’s very little to limit the capability of artificial intelligence. AIs will be capable of developing increasingly smarter AIs and it will quickly run away from us: artificial intelligence orders of magnitude greater than the combined human population; an event-horizon beyond which we can no longer predict; referred to as the singularity; 2045 AD.

Some people believe this will be a dystopia. With a vastly superior intelligence inhabiting Earth, we’ll be lucky if they keep humans as pets or historical artifacts. Others, such as Ray Kurzweil, paint a more optimistic scenario. Humanity is able to merge with the non-biological intelligence to form a post-human society.

There’s reason to believe this too. As computing devices have become smaller, cheaper and faster, the separation between human and machine has decreased. Computers have gone from something you visit in a lab, to something in your room, on your lap, in your pocket, and very soon something that you wear on your body. We’re already inseparable from our smartphones, and instant internet access has begun to alter how we store information. As computers continue to shrink in size, networked devices will become part of our bodies, interfacing directly with our nervous system and blurring the distinction between the virtual and the real.

The future at its most banal. Source: Fernando Barbella, Signs from the Near Future

How you should react to these ideas

When I first came across these ideas I dismissed them as plausible-enough science fiction. Ideas well established in books and movies but not something that could possibly affect me in my lifetime. Now that I’ve read the history of these predictions my opinion has begun to change.

These concepts aren’t all that new, which may contribute to some people’s skepticism that they’ll ever happen. Back in 1950, Alan Turing popularised the idea of a thinking machine[2] and in 1965 his colleague I. J. Good proposed the idea of an ‘intelligence explosion’ as a result of compounding improvements. Vernor Vinge introduced the term Singularity in 1993 and he expects it to occur by 2030. Ray Kurzweil is the most visible proponent of these ideas, writing several books, producing a dubious movie and presenting many TED talks. The fact that, to many, Kurzweil wholly represents the Singularity is somewhat unfortunate, as attacking him has become a proxy for attacking the ideas. I admire his work but I also find that his self-assured propheteering makes it hard to differentiate between optimistic techno-babble and justifiable scientific predictions.

Despite the logic of the arguments, it’s still difficult to believe all the implications. I can accept that things have changed a lot within the past 50 years, but it’s difficult to think that the future will be remarkably different from the generations before me.

This is probably a common reaction. People consistently underestimate how much they’ll change in the future despite acknowledging how much they’ve developed in the past. The same seems true of technological change. Everyone can appreciate the change from Atari to Oculus, or from fax to Snapchat, but few people believe we can go from Siri to Superhuman Intelligence in a similar timespan.

Generally, we struggle to comprehend ideas outside our level of abstraction. Our day-to-day model of the world doesn’t apply well to things that are very small (subatomic) or very large (galaxies or universes) and as a result we have almost no intuition about them. Similarly, we have no point of reference for intelligence greater than our own, which makes it difficult to accept this as a realistic possibility[3].

Overall, I’ve come to expect that many of these predictions will come true, but that it will take longer than Kurzweil hopes[4].

“When a scientist says something is possible, they’re probably underestimating how long it will take. But if they say it’s impossible, they’re probably wrong.” — Modification of Clarke’s First law by Richard Smalley

Should this affect how you live your life?

If you accept that some breakthrough representing the singularity will probably occur, how should that change how you live your life?

The first thing is to live long enough to experience it. Kurzweil seems quite determined to see it through, taking over 150 supplements in an attempt to live long enough to live forever. He’s 66, so this is quite encouraging to anyone younger.

Make sure that we prepare appropriately for it. Once we create strong AI it seems unlikely that we’ll be able to undo the situation. Creating “friendly AI” from the beginning is crucial. Stephen Hawking recently wrote for the Independent calling for more serious research into this area:

“If a superior alien civilisation sent us a message saying, ‘We’ll arrive in a few decades,’ would we just reply, ‘OK, call us when you get here – we’ll leave the lights on’?” – Stephen Hawking

Although it’s seductive to view technological progress as inevitable, there’s still an enormous amount of work that needs to be done. Now’s a good time to work in Human–Computer Interfaces, VR, nanotechnology, prosthetics, biotechnology and AI.

Invest your time in technology. At some point computers won’t need to be explicitly instructed and they’ll be able to understand the vagueness of human requests. This isn’t going to happen until we have strong AI, so until then, learning how computers work will put you on the favourable side of the digital divide.

Invest money in technology stocks[5]. These breakthroughs will create huge wealth and that’s likely to accrue to the big tech companies. Investing in their stock is one of the lowest-effort ways to share in that. Google, which has “less than 50 percent but certainly more than 5 percent” of the world’s leading experts in machine learning (Source), is probably not a terrible choice.

Once you start thinking about technological progress in this context, every day you will see evidence of how things are changing. There are good reasons for being excited about the future, as it could be very different indeed.

“Finished Singularity is Near. Such a radical road map for the future. Trying to balance my excitement with my fear of drinking the kool-aid.” – Jordan Burgess (@jordnb), May 23, 2014

1. Initially, research into creating AI centered on ‘expert systems’, programming a sufficient number of rules to handle each and every input case. That was successful only in very narrow fields of expertise. Now, there is a renewed expectation around generalised algorithms based on chaotic statistical models such as neural networks and reason to believe that learning comes from only a single algorithm.

2. Alan Turing put the computational power of the human brain at around 10^10 cps. He also (in 1950) expected that a computer would pass the Turing test about 30% of the time by the year 2000. The 10^10 computational power was achieved for about $100,000 in 2000, so he was close about the technological progress but underestimated the complexity of the brain or the stupidness of humans.

3. Greater than human-intelligence also challenges the anthropocentric belief that human beings are the most important species in existence. Similarly to ideas like the earth not being at the centre of the universe, or that humans evolved from apes, this is a difficult idea to let go of.

4. Kurzweil’s predictions are actually based on a double-exponential curve: that the rate of progress is itself speeding up. There’s evidence to show that this has been the case historically but extrapolating that out into the future seems overly optimistic.

5. I’m not qualified to offer financial advice, so don’t just blindly follow what someone has said in a blog.